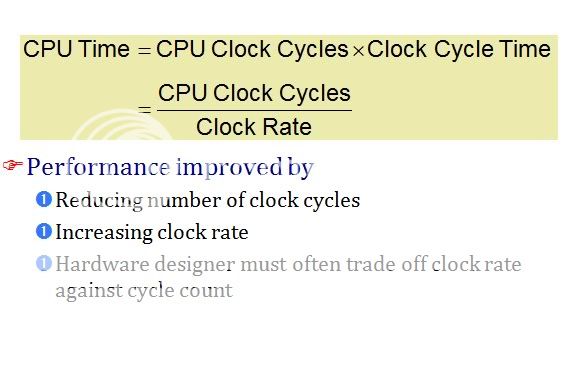

Relation between CPU's clock speed and clock period

In this slide, things look a little off to me. Clock cycle time, or clock period, is already the time required per clock cycle. The question is: does the term "clock rate" make sense?

It also says, "Hardware designer must often trade off clock rate against cycle count." But they are inversely related: if one increases the clock speed, the clock period (time per clock cycle) decreases automatically. Why would there be a choice?
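It may help to separate the quantities the slide is juggling. Clock period is simply the reciprocal of clock rate, so those two cannot be traded against each other. The slide's tradeoff is between clock rate and *cycle count* (how many cycles a program needs), linked by the textbook relation CPU time = cycle count × clock period = cycle count / clock rate. A minimal Python sketch, with hypothetical numbers and illustrative function names:

```python
def clock_period_s(clock_rate_hz: float) -> float:
    """Clock period (seconds per cycle) is the reciprocal of clock rate (cycles per second)."""
    return 1.0 / clock_rate_hz


def cpu_time_s(cycle_count: int, clock_rate_hz: float) -> float:
    """CPU time = cycle count x clock period, computed here as cycle count / clock rate."""
    return cycle_count / clock_rate_hz


# Hypothetical 2 GHz clock: each cycle lasts 0.5 ns.
print(clock_period_s(2e9))  # 5e-10

# Hypothetical program that needs 10 billion cycles at 2 GHz.
print(cpu_time_s(10_000_000_000, 2e9))  # 5.0
```

Raising the clock rate only shortens CPU time if the cycle count does not grow to compensate; that is the choice the slide is pointing at.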

Or am I missing something?

Answer

First things first: slides aren't always the best way to discuss technical issues, so don't take any slide as gospel. There's a huge amount of handwaving going on in slides, supporting gigantic claims with very little evidence.

That said, there are tradeoffs:

- faster clocks are usually better: they get more integer or floating-point operations done per second

- but if the faster clock doesn't line up well with external memory clocks, some of those cycles might be wasted

- slower clocks might draw less power

- faster clocks let an operating system kernel get more work done on every wakeup and return to sleep sooner, so they might draw less power overall

- faster clocks might mean some operations take more clock cycles to actually execute (think of the supremely deep pipelines of the Pentium IV, where branch mispredictions were very costly: despite a faster clock than the Pentium III or Pentium M, its real-world speed was very similar to theirs.)
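The last point is exactly the slide's "clock rate against cycle count" tradeoff. A minimal Python sketch, assuming a simplified CPI model in which the only stalls are branch mispredictions, and using entirely made-up numbers (not Pentium measurements). `Design`, `cycles_per_instruction`, and `cpu_time_s` are illustrative names: a deeper pipeline allows a faster clock but loses more cycles per misprediction, so the two designs end up with similar CPU time.

```python
from dataclasses import dataclass


@dataclass
class Design:
    """A hypothetical CPU design; every number used below is made up for illustration."""
    name: str
    clock_rate_hz: float
    base_cpi: float           # cycles per instruction when no branch is mispredicted
    mispredict_penalty: int   # cycles lost per misprediction (grows with pipeline depth)


def cycles_per_instruction(d: Design, branch_fraction: float, mispredict_rate: float) -> float:
    """Simplified model: base CPI plus the average cost of branch mispredictions."""
    return d.base_cpi + branch_fraction * mispredict_rate * d.mispredict_penalty


def cpu_time_s(d: Design, instructions: int, branch_fraction: float, mispredict_rate: float) -> float:
    """CPU time = cycle count / clock rate, where cycle count = instructions x CPI."""
    cycle_count = instructions * cycles_per_instruction(d, branch_fraction, mispredict_rate)
    return cycle_count / d.clock_rate_hz


deep = Design("deep pipeline", clock_rate_hz=3.0e9, base_cpi=1.0, mispredict_penalty=30)
shallow = Design("shallow pipeline", clock_rate_hz=2.2e9, base_cpi=1.0, mispredict_penalty=10)

for d in (deep, shallow):
    t = cpu_time_s(d, instructions=1_000_000_000, branch_fraction=0.2, mispredict_rate=0.1)
    print(f"{d.name}: {t:.3f} s")
# deep pipeline: 0.533 s
# shallow pipeline: 0.545 s
```

Despite a clock about 36% faster, the deep design's higher cycle count (CPI 1.6 versus 1.2 in this toy model) eats almost all of the gain.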